When NetApp ONTAP transfers configuration backups to a remote SFTP server, by design it does not delete old backups, so they eventually fill up the disk. This script exists to solve that problem.

Intro

This post describes setting up a recurring Jenkins job that executes a bash script to manage configuration backup files in a specified backup directory.

The bash script does the following:

- Sets the backup directory path and the maximum number of backup files to keep per group.

- Finds all unique hostname patterns (hostname plus backup schedule) among the backup files.

- For each unique pattern, counts the matching backup files.

- If there are more backups than the specified maximum, deletes the oldest ones so that only the most recent max_files files remain.
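The pruning step for a single group can be sketched as a small function. This is a minimal sketch, assuming one group's file names arrive on stdin and that the timestamp in each name sorts chronologically; the function name files_to_prune and the sample file names are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: read ONE group's backup file names on stdin and print
# the ones outside the retention window, oldest first.
# Assumes each name embeds a timestamp that sorts chronologically.
files_to_prune() {
    local keep="$1"
    local sorted count
    # LC_ALL=C gives a plain byte-wise sort, independent of the system locale
    sorted=$(LC_ALL=C sort)
    count=$(printf '%s\n' "$sorted" | grep -c .)
    if [ "$count" -gt "$keep" ]; then
        printf '%s\n' "$sorted" | head -n "$((count - keep))"
    fi
}

# Four hypothetical daily backups of one cluster; keep the newest three
printf '%s\n' \
    cluster1.daily.2024-01-04.00_10_00.7z \
    cluster1.daily.2024-01-01.00_10_00.7z \
    cluster1.daily.2024-01-03.00_10_00.7z \
    cluster1.daily.2024-01-02.00_10_00.7z | files_to_prune 3
# prints: cluster1.daily.2024-01-01.00_10_00.7z
```

Piping the output into xargs rm -f turns the listing into the actual deletion, which is what clean_backups.sh does inline.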

Config Backups Retention Jenkins Project

I created a Freestyle project that runs on the schedule “H 5 * * *”: daily, at a minute within the 5 AM hour that Jenkins derives from a hash of the job name. This spreads the jobs scheduled for that hour evenly instead of starting them all at 5:00.

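Under Build Environment, tick “Use secret text(s) or file(s)” and add the credentials used to SSH to the remote server where the backups are stored. The shell step below expects them in the variables SSH_username and SSH_password (for example, via a “Username and password (separated)” binding).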
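If the binding is misconfigured, the shell step fails later with a less obvious SSH error. A minimal fail-fast check could run at the top of the step; it assumes the variable names SSH_username and SSH_password used by the shell step below, and the helper name require_vars is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: report every named variable that is unset or empty.
require_vars() {
    local name missing=0
    for name in "$@"; do
        # ${!name} is bash indirect expansion: the value of the variable called $name
        if [ -z "${!name:-}" ]; then
            echo "Missing required variable: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage at the top of the Execute Shell step:
# require_vars SSH_username SSH_password || exit 1
```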

Add a build step “Execute Shell” and use the code below.

Full Jenkins/Bash code

This is the Execute Shell command in the Jenkins job. It:

- Syncs the local clone of the Azure DevOps repo so the latest scripts are available locally

- Copies clean_backups.sh to the workspace

- Copies clean_backups.sh to the remote server

- Makes the remote clean_backups.sh executable

- Executes clean_backups.sh on the remote server; its output appears in the Jenkins console log

```bash
#!/bin/bash +x
# "+x" keeps each command from being echoed to the Jenkins console log.
# A custom shebang drops Jenkins' default "-e", so stop on the first failure
# explicitly; otherwise a failed sync or copy could still run an old script.
set -e

echo "Starting repo sync"
cd /var/lib/jenkins/repo/Infra-DevOps
git fetch --force git@ssh.dev.azure.com:v3/Infra-DevOps
git merge FETCH_HEAD
echo "Finished repo sync"

echo "Copying latest version of the script(s)"
cd "Bash Scripts/NetApp/Remove_old_backups"
cp -p clean_backups.sh "$WORKSPACE"
echo "Finished transfer"

cd "$WORKSPACE"

# Set variables
echo "Setting variables"
local_file="$WORKSPACE/clean_backups.sh"
remote_file="/home/storageadmin/clean_backups.sh"
remote_host="10.0.0.33"

# Ensure sshpass is installed
echo "Checking if sshpass is installed"
if ! command -v sshpass &> /dev/null; then
    echo "sshpass could not be found. Please install it."
    exit 1
fi

# Hand the password to sshpass via the SSHPASS environment variable (sshpass -e)
# so it does not appear on the command line or in the process list
export SSHPASS="$SSH_password"

# Copy the script to the remote server
echo "Copying latest version of the script to the remote server"
sshpass -e scp -o StrictHostKeyChecking=no "$local_file" "${SSH_username}@${remote_host}:${remote_file}"

# Make the remote script executable
echo "Making the remote file executable"
sshpass -e ssh -o StrictHostKeyChecking=no "${SSH_username}@${remote_host}" "chmod +x ${remote_file}"

# Execute the script on the remote server; its output appears in the Jenkins console log
echo "Executing the script on the remote server"
sshpass -e ssh -o StrictHostKeyChecking=no "${SSH_username}@${remote_host}" "${remote_file}"
```

And here is clean_backups.sh:

#!/bin/bash

# Configuration

backup_dir="/home/ontap_config_backups"

max_files=3

# Get unique hostname and backup frequencies

unique_patterns=$(find $backup_dir -type f -name "*.7z" | sed -E "s/([^\.]+\.[^\.]+)\.([^\.]+\.[^\.]+.7z)/\1/" | sort | uniq)

# Iterate through each unique pattern and keep only the latest 3 files

for pattern in $unique_patterns; do

file_count=$(find $backup_dir -type f -wholename "${pattern}.*.7z" | wc -l)

# If there are more than max_files, delete the older ones

if [ $file_count -gt $max_files ]; then

files_to_delete=$((file_count - max_files))

find $backup_dir -type f -wholename "${pattern}.*.7z" | sort | head -n $files_to_delete | xargs rm -f

fi

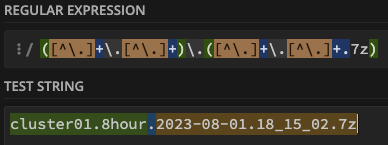

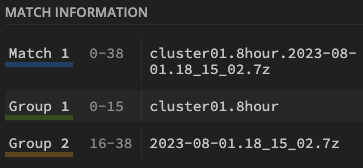

doneAlthough REGEX are beyond the scope of this blog post, I found the following site to be a lifesaver (regex101: build, test, and debug regex) and the expression I created separates the two parts of the backup file name:

So that we can ignore group 2 below and keep only a few of the backups in group 1:
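To see what the sed expression keeps, it can be run against a few file names. This sketch assumes file names of the form <hostname>.<schedule>.<date>.<time>.7z; the names below are made up for illustration, and group_key is a hypothetical wrapper around the expression from clean_backups.sh.

```bash
#!/bin/bash
# Hypothetical wrapper: print regex group 1 (hostname + schedule) for each
# input line, dropping group 2 (date, time and the .7z extension).
group_key() {
    sed -E 's/([^\.]+\.[^\.]+)\.([^\.]+\.[^\.]+.7z)/\1/'
}

# Made-up backup paths for one cluster with two schedules
printf '%s\n' \
    /home/ontap_config_backups/cluster1.8hour.2024-01-01.06_15_00.7z \
    /home/ontap_config_backups/cluster1.daily.2024-01-01.00_10_00.7z \
    /home/ontap_config_backups/cluster1.daily.2024-01-02.00_10_00.7z |
    group_key | LC_ALL=C sort -u
# prints:
# /home/ontap_config_backups/cluster1.8hour
# /home/ontap_config_backups/cluster1.daily
```

Both daily files collapse to one key, which is why the retention count applies per hostname and schedule rather than per hostname alone.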

In summary, this script enforces a retention policy that keeps only the 3 most recent configuration backup files for each hostname and backup schedule, deleting any older backups. It keeps the backup directory clean automatically and prevents it from growing out of control.
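Before pointing the script at real backups, the same logic can be rehearsed in a throwaway directory. This is a minimal sketch: it copies the grouping and pruning steps from clean_backups.sh, swaps the real backup directory for one created with mktemp, and uses made-up file names. It assumes GNU find (for -wholename), as on the Linux backup server.

```bash
#!/bin/bash
# Rehearse the retention logic on dummy files in a temporary directory.
set -eu

backup_dir=$(mktemp -d)
trap 'rm -rf "$backup_dir"' EXIT
max_files=3

# Five daily and two 8hour dummy backups for one (hypothetical) cluster
for day in 01 02 03 04 05; do
    touch "$backup_dir/cluster1.daily.2024-01-${day}.00_10_00.7z"
done
touch "$backup_dir/cluster1.8hour.2024-01-05.06_15_00.7z" \
      "$backup_dir/cluster1.8hour.2024-01-05.14_15_00.7z"

# Same grouping and pruning steps as clean_backups.sh
unique_patterns=$(find "$backup_dir" -type f -name "*.7z" |
    sed -E "s/([^\.]+\.[^\.]+)\.([^\.]+\.[^\.]+.7z)/\1/" | sort -u)
for pattern in $unique_patterns; do
    file_count=$(find "$backup_dir" -type f -wholename "${pattern}.*.7z" | wc -l)
    if [ "$file_count" -gt "$max_files" ]; then
        find "$backup_dir" -type f -wholename "${pattern}.*.7z" | sort |
            head -n "$((file_count - max_files))" | xargs rm -f
    fi
done

# Expect the three newest daily files and both 8hour files to remain
ls "$backup_dir"
```

Only the two oldest daily files are removed; the 8hour group is under the limit, so it is left alone.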

Link to source on GitHub

The bash script is also available here.